By Mark Schaefer

It was quite a thrill for me to hear Sir Tim Berners-Lee speak before a capacity crowd at Dell EMC World in Las Vegas. Berners-Lee is widely regarded as the inventor of the World Wide Web and is also the director of the World Wide Web Consortium, which oversees the continued development of the Web.

Berners-Lee’s presentation was on the future of artificial intelligence and it was one of the most anticipated talks of the Dell EMC event. Here are some of the highlights of the speech. (PS I’m lazy. From here on out Tim Berners-Lee is TBL!)

AI is already here

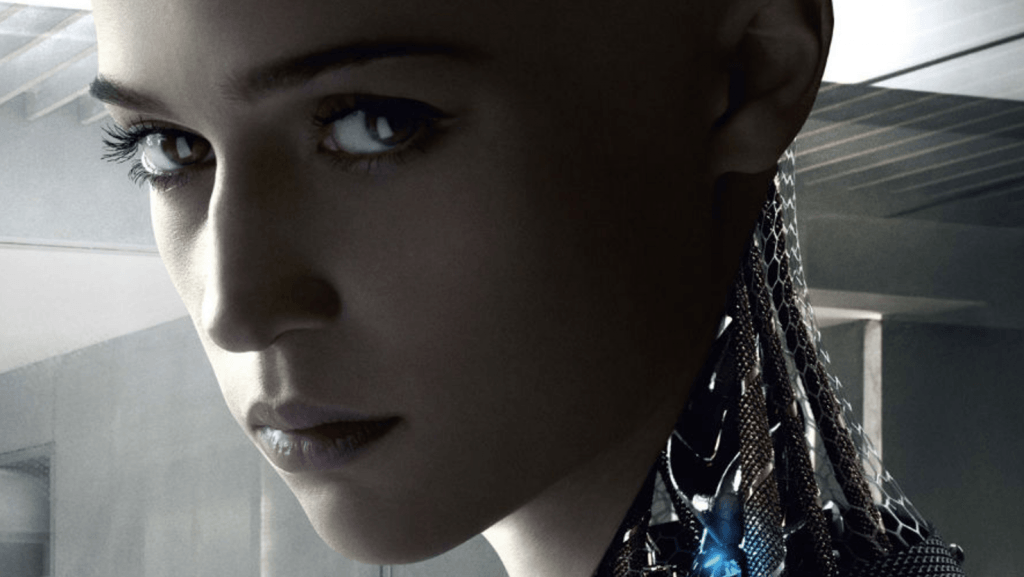

TBL dismissed the notion that AI will threaten us in the form of a hulking Terminator or a beautiful robot like the one in Ex Machina. He said it is already here.

“Every computer is AI,” he said. “We’re trying to get computers to do what we don’t want to do. The bane of my existence is working on projects that should be done by a computer. Many humans are placeholders, doing work just until the robots are ready to take over for us.”

The web’s inventor said we are in an age where computers are truly being “trained” by learning on their own, rather than being coded by humans. For example, it is much more efficient to let a computer learn how to play a game on its own than to code in all the possible moves or have it observe a human playing. Computers are even creating their own code to solve problems beyond their original programming. They are learning to improve on their own. Which … could be a problem if it gets out of control.

TBL organized his talk around three common fears about the age of artificial intelligence:

1. Robots taking our jobs

“A self-driving car, an automated factory, a smart tractor — all of these things could seem threatening if it replaces a human worker,” he said. “And indeed there is going to be a big shift coming. Maybe some will even consider it a crisis in employment because we will get to the point where machines are doing a huge number of our jobs. The economy will be fine because the work is getting done but there will be fewer people working in it.

“Today, most of the jobs being replaced by computers are lower level, but eventually it will be more advanced: newspaper editors, lawyers, doctors. AI could become a great front page editor … in fact it would create a great editorial system.

“We will need to consider a base universal wage, as is being considered by some Scandinavian governments. I think that is an interesting idea. But I think the bigger issue is keeping a permanently unemployed population occupied. People will migrate to other pursuits but it will not be work in a traditional sense. How do we learn to respect people who no longer work? How do we get them to respect themselves?”

2. Robots act as judge and jury

He told a story about how computers use available data to learn and make observations about our society. A super intelligent machine would have to analyze available data to make inferences about the world. He said AI might make incorrect assumptions about an ethnic group, for example, because there are biases built into the raw data.

Another example he used was news editing. “Today Facebook has hired thousands of people to try to find fake news and get rid of it. Maybe we can train AI to do that. But the editorial decision is based on what? The computer may use historical information to make judgments about the news but it would be guided by human bias in the previous editing decisions.

“The big issue is fairness,” he said. “We have mathematical models that show that even algorithms are unfair if results are based on human-generated data. By definition, if a decision is fair to one point of view, it is unfair to another. What’s fair? Tricky. Very tricky.”

The web’s inventor paused at this point in his presentation to slam social media. “Is the social networking idea actually working? Is it really helping humanity? Is it spreading hate or love? Is something like targeted advertising even ethical?”

3. The singularity

The “singularity” is the hypothesis that the invention of artificial super intelligence will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization. According to this hypothesis, artificial intelligence would enter a “runaway reaction” of self-improvement cycles, with each new and more intelligent generation appearing more and more rapidly, causing an intelligence explosion and resulting in a powerful super intelligence that would far surpass all human intelligence.

TBL said that this singularity will happen, most likely within the next 50 years, and that we will certainly need some sort of measures in place to secure civilization. He pointed to the famous “three laws of robotics” first presented by science fiction writer Isaac Asimov in 1942:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

“The flaw of this thinking is that there is an underlying assumption that robots are completely deterministic and rational. That is not possible. The moment you create robots that can have a conversation, you are building in judgments. So this is one of the things to watch for.

“Most of the people in the field like Bill Gates and Elon Musk say it is likely we will have a robot smarter than us and it is not obvious what will happen in that case. It’s a genie in a bottle. And maybe the person who controls the genie will be very powerful.”

Super intelligence in the cloud

TBL said the threat of the singularity won’t be from a hulking Terminator or a beautiful smart robot like in Ex Machina. It’s likely that super intelligence will be in the company cloud.

“I don’t think the AI threat of the future will be a gorgeous female humanoid but we seem to be obsessed with that idea from the movies. There is quantum intelligence sitting right now on the cloud. It does not have blue eyes but it is very powerful.

“Let’s imagine what is happening in the stock trading industry. To accomplish programmed, fast trading, this is something that can only be done by a computer. In essence we have turned over a large portion of that business to computers. Our businesses want computers to do smart things, do more efficient things. We want our computers to be the best so we can survive in business. We are training our computers to be the best and survive. Computers will create new programs and even new companies to do their jobs better, maybe with slightly different parameters. AI will create its own programs. In fact, computers will be able to create better, faster programs than humans.

“Even today, our sales people are being trained and optimized by AI because the AI will know what strategies work the best. Human behaviors will be determined by AI because the bonuses will be determined by AI. Computers will be programming the people.

“The fact is that AI in your data centers right now may be the technology that becomes dominant. Technology in many cases is already running things and dominating humans.

AI as destiny?

“Many think ‘well, as long as humans are in charge we’ll be OK. They’ll keep the intelligence in check.’ But we cannot be assured that companies with people in charge will do the right thing. We have a history where people cannot be trusted to make the right decision. Dystopia will be much more boring than a cute robot taking over the world. It will be a company program in the cloud.

“Humans are creating a world for themselves and it’s very easy to write a dystopian narrative that imagines how an AI super intelligence will create a world optimized for itself and accidentally we all get wiped out. Another view is that we will be their pets. Their intelligence compared to us will be like our intelligence compared to a dog. We can communicate with dogs but only in a very basic emotional way, and we look kindly on them. In this utopian environment the machines swoop in and take our nukes and protect us. The truth? It will probably be somewhere in between these scenarios.

“There will have to be controls in place but there is another philosophy that claims the purpose of humans is to create this super intelligence. We have been on an unstoppable path throughout history to create things that augment our own human capabilities. Some people believe this is not something to be feared, but our true human destiny. They believe we are here to create things that are better than us to solve the problems we can’t solve. That we have to create this intelligence to save ourselves.”

Disclosure: I attended the Dell EMC World event with my expenses paid by Dell. I have also worked for Dell to produce a number of content properties including a new podcast called Luminaries.

Mark Schaefer is the chief blogger for this site, executive director of Schaefer Marketing Solutions, and the author of several best-selling digital marketing books. He is an acclaimed keynote speaker, college educator, and business consultant. The Marketing Companion podcast is among the top business podcasts in the world. Contact Mark to have him speak to your company event or conference soon.
